Man vs Machine: How AI Is Upending the Creative Process

A new wave of tech companies are building AI-powered tools for creatives. How do they compare to the human touch?

- Creatives, including designers, illustrators and filmmakers, are increasingly working alongside AI tools.

The Oxford English Dictionary defines ‘creativity’ as “the use of imagination or original ideas to create something.” Creativity has long been considered a uniquely human quality, but recent advances in artificial intelligence (AI) have produced a new wave of AI-powered tools that promise to make creating content faster and easier.

Industry analyses suggest that the majority of creatives have already adopted AI tools, and the more commercial corners of the creative industries are most likely to be using the tech. For example, one recent survey by industry publication It’s Nice That found that 83 percent of creatives have already adopted AI. Among the respondents, 55 percent of those who work in-house at creative agencies and studios had used AI tools in the past week. But at the same time, 38 percent of solo artistic practitioners and 27 percent of self-employed freelancers said they’d never used AI at all in their work.

Adoption among creatives has followed the fastest-developing tech. In the last couple of years, the most rapid advances have come in text-to-image generative AI models from large tech companies, such as OpenAI’s DALL-E, Stability AI’s Stable Diffusion and Microsoft’s Bing.

These platforms use generative AI models to produce unique images, often aiming for photorealism, from text and image prompts.

“The areas of the creative industries that seem to have been adopting AI the fastest are those that engage with visual design and graphic arts and the advertising and marketing industries,” says Caterina Moruzzi, Chancellor’s Fellow in Design Informatics at the University of Edinburgh. Moruzzi’s research into the integration of AI tools into creative practices has found that the moving image sector, film and animation, is rapidly following suit. “AI technologies for temporally extended mediums like video present more challenges, such as consistency through time, but companies are starting to invest more in this sector,” Moruzzi says.

Video generator case study: Synthesia

One such company is Synthesia, a London-based startup that’s built a platform that allows users to create customizable video content from text prompts. Launched in 2020, Synthesia’s B2B platform aims to replace text-based communication with video, enabling anyone within a company to create video content without prior video production knowledge — saving on employee resources and production costs.

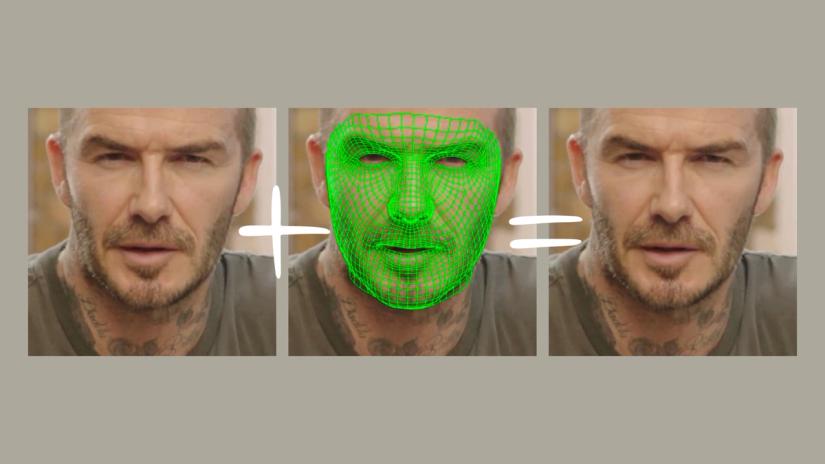

Synthesia has developed a generative AI model that creates and animates human-like AI avatars that present a script that users type into its platform. The firm has 50,000 paying customers globally, ranging from small businesses to large enterprises with thousands of employees on their account. Synthesia counts 60 percent of the Fortune 100 among its customers, the company says. “Back in 2020, the first versions of these avatars were a bit robotic, but now I’d say they’re about 95 to 99 percent indistinguishable from a real person,” says Alexandru Voica, Synthesia’s head of corporate affairs and policy.

It’s that remaining 1 to 5 percent difference that still makes watching a video generated by Synthesia a bit jarring to the human eye. While this may not matter too much for internal communications or an HR training video, Synthesia’s platform is not quite up to a more creative endeavor like an external TV advertising campaign.

“Our goal is not to replace creative agencies or traditional video teams, but to enable business users inside companies to communicate with video, rather than text,” Voica says. Meanwhile, larger companies are trying to hone their models for the more creative space. OpenAI, for example, is reportedly courting Hollywood studios with its new AI video generator Sora, which it hopes filmmakers will integrate into their work. Meta and Alphabet have also released text-to-video research projects, while the best-funded AI startups in the space, like Stability AI and Runway AI, are fine-tuning their more creative models, too.

The creativity spectrum

Synthesia and OpenAI are attempting to replicate human outputs from opposite ends of what Voica describes as the ‘creativity spectrum’. On the one hand, Synthesia’s platform could be criticized as lacking a human-like imagination because it’s very deterministic: no matter how many times you generate a video using the same script, your avatar will always sound and look the same. On the other hand, OpenAI’s platform is so creative that every time you type the same prompt into its model, the video output will be slightly different – what Voica dubs a “video slot machine”.

This process of trial and error is fine for creative professionals with time to experiment, but less appropriate for those working to tighter deadlines in more of a business environment.

This issue of the creativity spectrum is currently shared by text-to-image generators, as well as AI music generators. So far, AI models can’t quite replicate authentic human creative output, so they are seen by the creative industries as more of a collaborative tool. Among creatives, there’s also a strong argument for keeping it that way. “Creatives’ excitement about the potential benefits contrasts with concerns over their impact on agency and control,” Moruzzi says. “This requires designing systems that are collaborative rather than prescriptive, empowering users to take creative control rather than becoming passive operators of AI-driven workflows.”

As with all industries, the biggest question is whether these rapidly advancing AI models will kill – or drastically alter – creatives’ jobs. A common narrative around the benefits of AI in the creative workflow assumes that AI increases process efficiency. But Moruzzi’s research shows that the time AI saves creatives on some tasks is not then freed up for the more creative aspects of the workflow they may enjoy.

“Efficiency is the value that is usually associated with the use of AI in the creative workflow, but efficiency is not necessarily a value for arts and creativity – it’s quite the opposite. Knowing the effort and time that went into developing a product is often what makes it valuable – the origin of the work, the history behind it, and the history of the maker. That’s not something that AI can replace,” Moruzzi says.

Ethical data collection

Another key issue the industry is grappling with is ethical data collection. AI systems that deliver personalized content often rely on huge volumes of user data to tailor outputs, which raises significant concerns around data privacy as well as intellectual property (IP).

Creatives, including Hollywood screenwriters, actors, songwriters and journalists, have taken strike action and lobbied for safeguards and licenses that would prevent misuse of their existing creative work, ensure proper credit, and compensate creators.

But so far, there is no unified approach towards IP or regulations for these AI tools.

According to Moruzzi, the biggest threat to these machines’ potential creativity is ‘model collapse’ – a phenomenon where AI models increasingly trained on data generated by other AI models experience a decline in quality (i.e., diversity and creativity) over time.

One major way to prevent model collapse is to ensure these AI tools are trained on good quality, human-generated data. For now, this means creative professionals’ input is essential: the industry can only advance as far as human creatives allow.

ThinQ by EQT: A publication where private markets meet open minds.